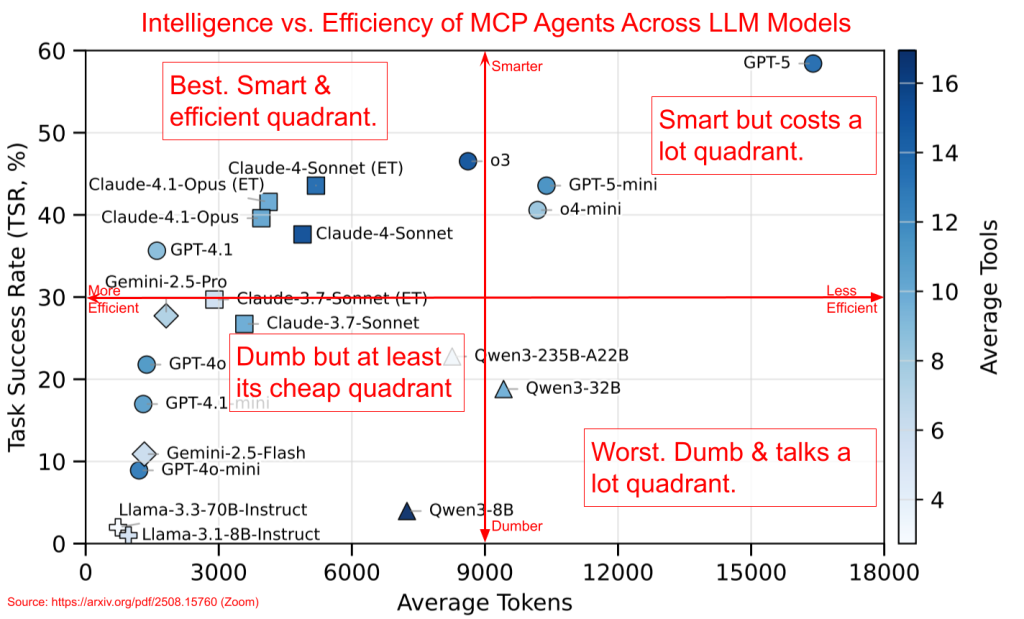

A team at Zoom recently published LiveMCP-101, a benchmark that stress-tests MCP (Model Context Protocol) agents on challenging queries. It's one of the few benchmarks designed specifically for MCP environments.

Interestingly, the paper plots token usage against task success rate, which is useful for comparing efficiency. But token count isn't the same as cost, because providers charge different rates per token.

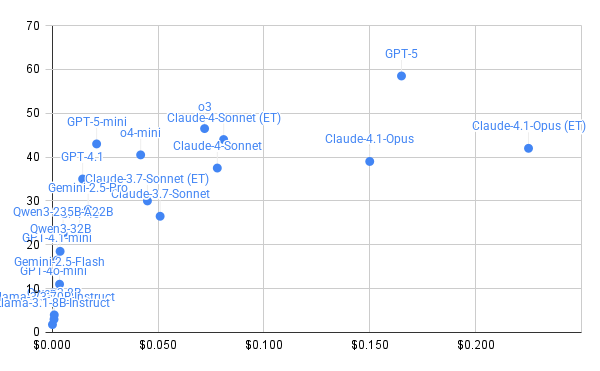

So I pulled market pricing for each model and replotted the data to show what you'd actually pay. Some models shift position significantly once you account for per-token prices instead of raw token count.
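The adjustment itself is simple arithmetic: multiply each model's token usage by its provider's per-token price. Here is a minimal Python sketch, assuming token counts are split into input and output and prices are quoted in USD per million tokens. The model names, token counts, success rates, and prices are hypothetical placeholders, not the benchmark's data or real market rates.

```python
from dataclasses import dataclass


@dataclass
class ModelRun:
    name: str
    input_tokens: int          # average input tokens per task (hypothetical)
    output_tokens: int         # average output tokens per task (hypothetical)
    success_rate: float        # fraction of tasks solved (hypothetical)
    input_price_per_m: float   # USD per 1M input tokens (hypothetical)
    output_price_per_m: float  # USD per 1M output tokens (hypothetical)


def total_tokens(run: ModelRun) -> int:
    """Raw token count: the x-axis of a token-based efficiency plot."""
    return run.input_tokens + run.output_tokens


def cost_per_task(run: ModelRun) -> float:
    """Dollar cost of one task: each token type times its price, summed."""
    return (run.input_tokens * run.input_price_per_m
            + run.output_tokens * run.output_price_per_m) / 1_000_000


# Hypothetical models: model-b uses fewer tokens but charges more per token.
runs = [
    ModelRun("model-a", 200_000, 20_000, 0.40, 0.50, 1.50),
    ModelRun("model-b", 100_000, 10_000, 0.40, 3.00, 15.00),
]

# Rank the same runs by token count and by dollar cost.
by_tokens = sorted(runs, key=total_tokens)
by_cost = sorted(runs, key=cost_per_task)

for r in runs:
    # Each (cost, success rate) pair is one point on the cost-adjusted plot.
    print(f"{r.name}: {total_tokens(r)} tokens, "
          f"${cost_per_task(r):.2f} per task, success {r.success_rate:.0%}")

print("cheapest by tokens:", by_tokens[0].name)
print("cheapest by cost:  ", by_cost[0].name)
```

With these made-up numbers, model-b uses half the tokens of model-a but costs more than three times as much per task, which is the kind of reordering a token-only chart hides.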

The video walks through the original chart, the cost adjustment, and how the rankings change when you look at the results through a cost lens.